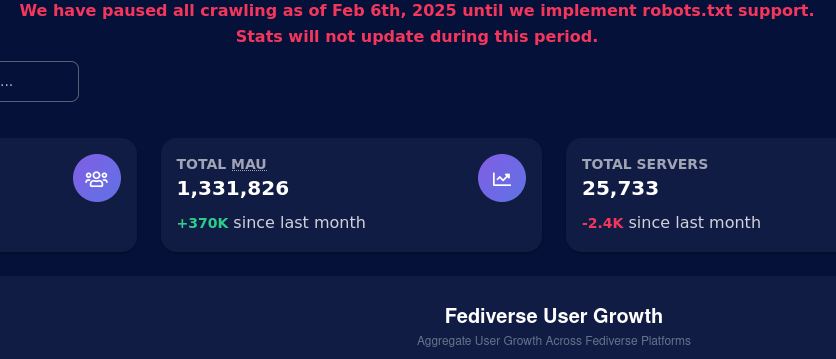

FediDB has stopped crawling until they get robots.txt support

lemmy.world/pictrs/image/4281c731-b98c-4e63-bd6…

submitted by mesamune

We have paused all crawling as of Feb 6th, 2025 until we implement robots.txt support. Stats will not update during this period.

FOSSDLE PieFed

Forced to use https://lemmy.fediverse.observer/list to see which instances are the most active

This looks more accurate than FediDB, TBH. You can see the initial surge from Reddit back in 2023, then the slow fall of active members. I personally think the number of users drops so much because certain instances turn off the ability for outside crawlers to get their user info.

Robots.txt is a lot like email in that it was built for a far simpler time.

It would be better if the server could detect bots and send them down a rabbit hole rather than trusting randos to abide by the rules.
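A minimal sketch of the "rabbit hole" idea, assuming a bot is flagged simply by requesting a path that robots.txt disallows. The `/trap/` prefix, the hidden link, and the hash-based page generator are all hypothetical illustration choices, not how any particular tool works:

```python
import hashlib

# Hypothetical trap location. robots.txt disallows it, so a well-behaved
# crawler never requests it; anything that does is ignoring the rules.
TRAP_PREFIX = "/trap/"
ROBOTS_TXT = f"User-agent: *\nDisallow: {TRAP_PREFIX}\n"

def rabbit_hole_page(path: str, links_per_page: int = 5) -> str:
    """Build a page whose links only lead deeper into the trap.

    Link targets are derived from a hash of the current path, so the maze
    is effectively endless but nothing has to be stored. A SHA-256 hex
    digest has 64 characters, enough for up to 8 eight-character links.
    """
    digest = hashlib.sha256(path.encode()).hexdigest()
    links = [
        f'<a href="{TRAP_PREFIX}{digest[i * 8:(i + 1) * 8]}">more</a>'
        for i in range(min(links_per_page, 8))
    ]
    return "<html><body>" + " ".join(links) + "</body></html>"

def handle(path: str) -> str:
    """Route a request path to robots.txt, the trap, or real content."""
    if path == "/robots.txt":
        return ROBOTS_TXT
    if path.startswith(TRAP_PREFIX):
        return rabbit_hole_page(path)
    # Real pages carry a hidden link into the trap for crawlers to find.
    return ('<html><body>real content '
            f'<a href="{TRAP_PREFIX}start" style="display:none">.</a></body></html>')
```

A real deployment could also deliberately delay its responses and would need some way to avoid trapping legitimate visitors.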

It was built for the living, free internet.

For all its dark corners, it was better than what we have now.

Because AI bots ignore robots.txt (especially when you don't explicitly mention their user-agent and use a * wildcard instead), more and more people are implementing exactly that, and I wouldn't be surprised if that is what triggered the need to implement robots.txt support for FediDB.
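For illustration, here is how a wildcard-only robots.txt compares with one that names a crawler explicitly, checked with Python's standard `urllib.robotparser`. The user-agent names `ExampleAIBot` and `FriendlyBot` are hypothetical placeholders. The parser only reports what the file asks for; whether a bot actually obeys it is entirely up to the bot.

```python
from urllib.robotparser import RobotFileParser

# Wildcard only: every crawler, including any AI bot, is asked to stay out.
WILDCARD = """\
User-agent: *
Disallow: /
"""

# Explicit: the (hypothetical) AI crawler is named and blocked, while an
# empty Disallow line leaves everyone else free to crawl.
EXPLICIT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow:
"""

def allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Return whether robots_txt permits agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

for name, rules in [("wildcard", WILDCARD), ("explicit", EXPLICIT)]:
    print(name,
          allowed(rules, "ExampleAIBot", "https://example.org/posts/1"),
          allowed(rules, "FriendlyBot", "https://example.org/posts/1"))
```

Per the file, the wildcard rule already covers the AI bot; naming it explicitly mainly removes any excuse and lets you keep other bots allowed.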

Already possible: Nepenthes.

I’m sold

that website feels like uncovering a piece of ancient alien weaponry

Ooh, nice.

Banned

While I agree with you, the number of bots has greatly increased of late. Although they are still not as numerous as users, they hit every link and wreck your caches by not focusing on hotspots like humans do.
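A toy simulation of that effect, assuming a small LRU cache and made-up traffic: human-like requests concentrate on a few popular pages, while a crawler walks every page once in order. The page counts, cache size, and 80/20 split are arbitrary assumptions, not measurements.

```python
import random
from collections import OrderedDict

def lru_hit_rate(requests, capacity):
    """Replay a request stream through an LRU cache and return the hit rate."""
    cache = OrderedDict()
    hits = 0
    for page in requests:
        if page in cache:
            hits += 1
            cache.move_to_end(page)        # mark as most recently used
        else:
            cache[page] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict least recently used
    return hits / len(requests)

rng = random.Random(42)
PAGES = 10_000
HOT = 100  # assumed hotspot: 1% of pages

# Human-like traffic: 80% of requests go to the hot pages.
humans = [rng.randrange(HOT) if rng.random() < 0.8 else rng.randrange(PAGES)
          for _ in range(20_000)]

# Crawler traffic: every page exactly once, in order.
crawler = list(range(PAGES))

print(f"humans:  {lru_hit_rate(humans, 500):.0%}")
print(f"crawler: {lru_hit_rate(crawler, 500):.0%}")
```

The exact numbers depend entirely on the assumed parameters. The point is only that a sequential crawl never re-requests a page, so every request misses and pushes something else out of the cache.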

Banned

Sure thing! Help me pay for it?

False positives? Meh, who cares... That's what appeals are for. Real people should figure it out soon enough.

Well, they needed to stope. Stope, I said. Lest thy carriage spede into the crosseth-rhodes.

We can't afford to wait at every sop, yeld, or one vay sign!

Whan that Aprill with his shoures soote

Did someone complain? Or why else would they stop?

No idea, honestly. If anyone knows, let us know! I don't think it's necessarily a bad thing. If their crawler was too aggressive, it could accidentally DDoS smaller servers. I'm hoping that is the reason, and that they will respect the robots.txt files some sites have.
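One common way to keep a crawler from overloading small servers is a per-host minimum delay between requests. A minimal sketch, assuming a fixed delay (the 2-second default and the `PerHostLimiter` name are hypothetical); a real crawler might instead honor a site's `Crawl-delay`, which Python's `urllib.robotparser` exposes via `RobotFileParser.crawl_delay()`:

```python
import time
from urllib.parse import urlsplit

class PerHostLimiter:
    """Enforce a minimum interval between consecutive requests to one host."""

    def __init__(self, min_interval=2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds; arbitrary default
        self.clock = clock                # injectable for testing
        self.sleep = sleep
        self.last_request = {}            # host -> time of previous request

    def wait(self, url):
        """Block until it is polite to send a request to url's host."""
        host = urlsplit(url).hostname
        previous = self.last_request.get(host)
        if previous is not None:
            remaining = self.min_interval - (self.clock() - previous)
            if remaining > 0:
                self.sleep(remaining)
        self.last_request[host] = self.clock()
```

Usage: call `limiter.wait(url)` right before each fetch. Requests to different hosts are not delayed by each other, so the crawler can still make progress across many instances.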

GoToSocial has a setting in development that is designed to baffle bots that don't respect robots.txt. FediDB didn't know about that feature and thought GoToSocial was trying to inflate their stats.

In the arguments that went back and forth between the devs of the apps involved, it turned out that FediDB was ignoring robots.txt, i.e., it was badly behaved.

Interesting! Is there a Git issue about this somewhere? That could explain quite a bit.

Yep!

It might be related to this issue: link here

It was a good read. Personally, I think it would probably have been better to just block GoToSocial (if that's possible, since it seems stuff gets blocked when you check it) until proper robots.txt support was added. I found it weird that they paused the entire system.
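Blocking a single server implementation should be feasible in principle, because NodeInfo documents report the software name. A minimal sketch, assuming the crawler has already fetched an instance's NodeInfo 2.x JSON; the `SKIP_SOFTWARE` denylist and `should_skip` function are hypothetical:

```python
import json

# Hypothetical denylist: software whose instances the crawler skips
# until it properly supports robots.txt.
SKIP_SOFTWARE = {"gotosocial"}

def should_skip(nodeinfo_json: str) -> bool:
    """Return True if the instance's NodeInfo names denylisted software."""
    doc = json.loads(nodeinfo_json)
    software = doc.get("software") or {}
    return str(software.get("name", "")).lower() in SKIP_SOFTWARE
```

Instances with missing or malformed software info are not skipped here; whether to treat them as unknown or as blocked is a policy choice.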

That being said, if I understand that issue correctly, I side with the view that it is GoToSocial that is misbehaving. It is poisoning data sets that are required for any type of federation to occur (nodeinfo, v1 and v2 statistics), on the grounds that the program in question is not respecting the robots file. It argues that this only stops crawlers, when it's clear that more than just crawlers are being hit.

imo this looks bad; it defo leaves a bad taste in my mouth regarding the project. I'm not saying an operator shouldn't have to respect robots.txt, but when you implement a system that negatively affects third parties, the response shouldn't be the equivalent of "sucks to suck, that's a you problem." Your implementation should respond with either zero or null; any other value and the program is just being abusive and hostile.

Thank you for providing the link. I'm actually on GoToSocial's side on this particular one, but I wish both sides had communicated a bit more before this got rolled out.

I think it's just one HTTP request to the nodeinfo API endpoint once a day or so. Can't really be an issue regarding load on the instances.
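For context, NodeInfo discovery works by fetching `/.well-known/nodeinfo`, which returns a JSON list of links to schema documents, and then fetching the linked document. A minimal sketch of picking the newest advertised schema from an example discovery response; the `example.social` host and the `pick_nodeinfo_href` helper are placeholders, and no network access is made:

```python
import json
from typing import Optional

# rel prefix used by NodeInfo schema links, e.g. ".../ns/schema/2.0"
SCHEMA_PREFIX = "http://nodeinfo.diaspora.software/ns/schema/"

def pick_nodeinfo_href(discovery_json: str) -> Optional[str]:
    """Return the href of the highest NodeInfo schema version advertised."""
    best_href, best_version = None, ()
    for link in json.loads(discovery_json).get("links", []):
        rel = link.get("rel", "")
        if not rel.startswith(SCHEMA_PREFIX):
            continue
        try:
            version = tuple(int(part) for part in rel[len(SCHEMA_PREFIX):].split("."))
        except ValueError:
            continue  # skip malformed version strings
        if version > best_version:
            best_href, best_version = link.get("href"), version
    return best_href

# Example body of GET https://example.social/.well-known/nodeinfo
EXAMPLE = json.dumps({"links": [
    {"rel": SCHEMA_PREFIX + "2.0", "href": "https://example.social/nodeinfo/2.0"},
    {"rel": SCHEMA_PREFIX + "2.1", "href": "https://example.social/nodeinfo/2.1"},
]})

print(pick_nodeinfo_href(EXAMPLE))  # https://example.social/nodeinfo/2.1
```

Either way, a periodic stats check amounts to a couple of small JSON GET requests per instance, not a site-wide crawl.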

It's not about the impact it's about consent.

True. The question here is: if you run a federated service, is that enough to assume you consent to federation? I'd say yes. And those Mastodon crawlers and statistics pages are part of the broader ecosystem of the Fediverse. But yeah, we can disagree here. It's now going to get solved technically.

I still wonder what these scrapers and crawlers do, and what the reasoning is for people who want to be part of the Fediverse but at the same time not be a public part of it in another sense... But I guess they do other things on GoToSocial than I do here on Lemmy.

Why invent implied consent when explicit consent has been the standard in robots.txt for ages now?

Legally speaking, there's nothing they can do. But this is about consent, not legality. So why rely on implied consent?

If she says yes to the marriage, that doesn't mean she permanently says yes to sex. I can run a fully air-gapped "federated" instance if I want to.

It's too bad, too, with the recent Reddit activity.

lol FediDB isn't a crawler, though. It makes API calls.

They do have a dedicated "Crawler" page.

And they do mention there that they use a website crawler for their Developer Tools and Network features.

Maybe the definition of the term "crawler" has changed, but crawling used to mean downloading a web page, parsing the links, then downloading all those links, parsing those pages, and so on until the whole site had been downloaded. If links to other sites were found in that corpus, the same process repeated for those. Obviously this could cause heavy load, hence robots.txt.
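The classic crawl described above can be sketched as a breadth-first walk over links that checks robots.txt before each fetch. To keep it self-contained, the "site" is a hypothetical in-memory dict standing in for real HTTP requests, and this sketch deliberately stays on one host instead of following links to other sites:

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit
from urllib.robotparser import RobotFileParser

class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

# Hypothetical in-memory site standing in for real HTTP fetches.
SITE = {
    "https://example.org/": '<a href="/a">A</a> <a href="/private/x">X</a>',
    "https://example.org/a": '<a href="/b">B</a> <a href="https://other.example/">ext</a>',
    "https://example.org/b": '<a href="/">home</a>',
    "https://example.org/private/x": "secret",
}
ROBOTS = "User-agent: *\nDisallow: /private/\n"

def crawl(start, agent="ExampleCrawler"):
    """Breadth-first crawl from start; return URLs in the order fetched."""
    robots = RobotFileParser()
    robots.parse(ROBOTS.splitlines())
    host = urlsplit(start).hostname
    seen, order = {start}, []
    queue = deque([start])
    while queue:
        url = queue.popleft()
        if not robots.can_fetch(agent, url):
            continue                  # respect Disallow rules
        html = SITE.get(url)          # stand-in for an HTTP GET
        if html is None:
            continue
        order.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            link = urljoin(url, href)
            # Same host only here; a full crawler would queue other hosts too.
            if urlsplit(link).hostname == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return order

print(crawl("https://example.org/"))
```

Every discovered link turns into another request, which is why load grows with the size of the site, unlike a fixed API call per instance.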

FediDB isn't doing anything like that, so I'm a bit bemused by this whole thing.

https://lemmyverse.net/ still crawling, baby. 🤘